The Age-Old Promise Has Been Broken

For centuries, human civilization has operated on a simple agreement: what you see with your own eyes and hear with your own ears can be trusted. This fundamental belief has shaped courtrooms, journalism, relationships, and democracy itself. Video evidence sent criminals to prison. Audio recordings exposed corruption. Photographs documented history.

That agreement is now shattered.

We have entered an era where artificial intelligence can fabricate anyone saying anything, doing anything, at any time. And here is the uncomfortable truth most people are not ready to face: your eyes and ears have become unreliable witnesses. The very senses you have trusted your entire life can now be weaponized against you.

The Numbers Behind the Crisis

This is not a distant, theoretical threat. It is happening right now, at a scale that should alarm anyone paying attention.

Deepfake fraud cases surged by 1,740 percent in North America between 2022 and 2023. Financial losses from deepfake-enabled fraud exceeded $200 million in the first quarter of 2025 alone. According to Signicat research, deepfake fraud attempts have grown by an astonishing 2,137 percent over the last three years.

The barrier to creating convincing fakes has completely collapsed. Voice cloning now requires just 20 to 30 seconds of audio. Convincing video deepfakes can be created in 45 minutes using freely available software. A 2024 McAfee study found that one in four adults has already experienced an AI voice scam, with one in ten having been personally targeted.

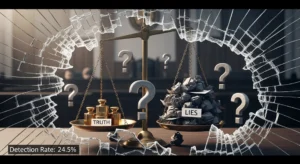

Perhaps most disturbing is this statistic: human detection rates for high-quality video deepfakes hover around just 24.5 percent. On a simple real-or-fake judgment, that is worse than random guessing when it comes to identifying fabricated reality.

When Executives Become Digital Puppets

Consider what happened to engineering firm Arup in early 2024. A finance worker received what appeared to be a routine video call. On the screen were familiar faces — the company’s chief financial officer and several senior executives, all discussing an urgent confidential acquisition. The employee authorized 15 transfers totaling $25.5 million.

Every single person on that call, except the victim, was an AI-generated deepfake.

This was not a glitch in the system. This was not a one-off incident. Fraudsters attempted to impersonate Ferrari CEO Benedetto Vigna through AI-cloned voice calls that convincingly imitated his southern Italian accent. Similar attacks have targeted WPP CEO Mark Read and numerous executives across industries. CEO fraud now targets at least 400 companies per day.

According to the World Economic Forum, current detection systems suffer a 45 to 50 percent drop in accuracy when they move from laboratory conditions to real-world deepfakes. Across deepfakes more generally, human accuracy hovers at just 55 to 60 percent, barely better than flipping a coin.

The Crisis Runs Deeper Than Fraud

But here is where the truth gets even more uncomfortable. Financial fraud, while devastating, represents only the surface of this crisis. What deepfakes are really attacking is something far more fundamental — our collective ability to know anything at all.

Researchers at UNESCO have articulated this precisely: we are not merely facing a crisis of disinformation — we are facing a crisis of knowing itself. Deepfakes do not just introduce falsehoods into our information ecosystem. They erode the very mechanisms by which societies construct shared understanding.

The Double Bind of Disbelief

There is a phenomenon called the “liar’s dividend” that makes this situation even more insidious. Once people become aware that deepfakes exist, they gain the ability to dismiss authentic recordings as probable fakes. This creates a double bind where neither belief nor disbelief in evidence can be justified.

Think about what this means. A politician caught on video making inflammatory statements can simply claim the footage is AI-generated. A corporation exposed for wrongdoing can dismiss authentic evidence as fabricated. Anyone with something to hide now has a built-in escape hatch.

Real videos get accused of being deepfakes. Deepfakes are believed to be real. The ground beneath our shared reality is dissolving.

The Erosion of Trust Infrastructure

Democracy depends on what scholars call “informational trust” — the reasonable expectation that what we see and hear has some connection to reality. Without this trust, civic cooperation becomes impossible. Voters cannot make informed decisions. Courts cannot weigh evidence. Journalism loses its power to hold institutions accountable.

The growing prevalence of fake videos is undermining what we know to be true. As one PLOS ONE study documented, deepfakes can lead to false beliefs, undermine the justification for true beliefs, and prevent people from acquiring true beliefs at all.

The Psychology of a Fabricated Reality

Social media makes everything worse through what psychologists call the “illusory truth effect.” When we see information repeated — whether true or false — it begins to feel more credible simply because it is familiar. Survey data across eight countries shows that prior exposure to deepfakes actually increases belief in misinformation. This effect persists regardless of cognitive ability.

You cannot simply think your way out of this problem. Intelligence offers no protection when your fundamental sensory inputs have been compromised.

We are approaching what researchers call a "synthetic reality threshold": a point beyond which humans can no longer distinguish authentic from fabricated media without technological assistance. Detection tools consistently lag behind creation technologies in what increasingly looks like an unwinnable arms race.

What This Means for Your Life

The implications extend far beyond corporate fraud and political manipulation. Consider how deepfakes could affect your personal life:

Relationships and Trust

Anyone with access to basic technology could fabricate evidence of infidelity, create fake communications, or manufacture “proof” of things that never happened. The foundation of personal relationships — trust in what we witness — becomes unstable.

Professional Reputation

A fabricated video could show you saying things that destroy your career. Even if eventually debunked, the damage often cannot be undone. In a world where anyone can be depicted doing anything, reputation becomes terrifyingly fragile.

Legal Vulnerability

Courts have traditionally treated video and audio evidence as highly reliable. That assumption is now dangerous. Innocent people could be convicted based on fabricated evidence, while guilty parties escape accountability by claiming authentic recordings are fake.

The Hard Truth About Solutions

Here is where we must be brutally honest: there is no technological silver bullet coming to save us.

Detection tools exist, but they are playing an endless game of catch-up. Some systems claim 99 percent accuracy, but those claims often exclude sophisticated or newly developed deepfakes. And as noted earlier, detector accuracy falls by 45 to 50 percent once these tools leave the lab and face real-world fakes.

Pairing human reviewers with AI detection shows promise, reaching up to 98 percent accuracy in some studies. But this requires significant resources and cannot scale to verify the billions of pieces of media we encounter daily.

What Actually Helps

Adapting to this new reality requires fundamental changes in how we process information:

Verify Before Trusting: The old model of trusting visual evidence by default must be reversed. Approach all media with appropriate skepticism, especially content that provokes strong emotional reactions or confirms existing biases.

Seek Multiple Sources: Any single piece of media evidence should be corroborated through independent channels. If you cannot verify something through multiple unrelated sources, treat it as unconfirmed.

Question Emotional Manipulation: Deepfakes are often designed to provoke outrage, fear, or excitement. When content triggers strong emotions, that is precisely when you should slow down and verify.

Accept Uncertainty: We must become comfortable with saying “I do not know” and “this cannot be verified.” Certainty based on visual evidence alone is no longer justified.

The Uncomfortable Path Forward

The deepfake crisis forces us to confront a painful reality about modern existence. We have built a civilization on the assumption that seeing is believing. That assumption served us well for millennia. It no longer holds.

This does not mean we should descend into paranoid rejection of all media. That response would be equally dangerous, leaving us unable to process legitimate information and making us vulnerable to manipulation in the opposite direction.

Instead, we must develop what might be called epistemic humility — an honest acknowledgment that our senses can deceive us, that evidence requires verification, and that truth in the digital age demands more work than simply watching a video.

Organizations across sectors must redesign their systems to account for this new reality. As we have explored before, authenticity matters more than ever in an AI-saturated world — but now we must also acknowledge that determining what is authentic has become genuinely difficult.

The truth hurts. And in the deepfake era, it has become harder than ever to find. But acknowledging that uncomfortable reality is the first step toward developing the tools, skills, and systems we need to navigate it.

Your eyes and ears are no longer reliable witnesses. The question now is: what will you do about it?